Inspecting and loading the tables

Once the tables have been created, we can get information about a table with `describe`. If we do not include `formatted` or `extended` in the command, we see only information about the columns. With `formatted`, we also see the table's location, its database and other attributes:

```python
spark.sql("describe formatted ratings").show(truncate=False)
```

The relevant rows of the output:

```
|Location    |file:/home/fish/MySpark/HiveSpark/spark-warehouse/movies.db/ratings| |
|InputFormat |.ql.io.orc.OrcInputFormat                                          | |
|OutputFormat|.ql.io.orc.OrcOutputFormat                                         | |
```

Now let's load data into the movies table. We can load data from the local file system or from any Hadoop-supported file system. If the file is in a Hadoop directory, we have to remove `local` from the `load data` command, and if we are loading the data just one time, we do not need to include `overwrite`. Please refer to the Hive manual for details.
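Hive's `LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename` statement has two switches that matter here: `LOCAL` (read from the local file system rather than a Hadoop-supported path) and `OVERWRITE` (replace the table's contents rather than add to them). As a minimal sketch, the hypothetical helper `load_data_statement` below assembles the statement so both choices are explicit; the file paths are illustrative assumptions, not the tutorial's.

```python
def load_data_statement(path, table, local=True, overwrite=False):
    """Build a HiveQL LOAD DATA statement (hypothetical helper).

    local:     True adds LOCAL (read from the local file system);
               False reads from a Hadoop-supported file system path.
    overwrite: True adds OVERWRITE (replace the table's current data);
               False adds the file to whatever the table already holds.
    """
    if "'" in path:
        raise ValueError("path must not contain a single quote")
    parts = ["LOAD DATA"]
    if local:
        parts.append("LOCAL")
    parts.append(f"INPATH '{path}'")
    if overwrite:
        parts.append("OVERWRITE")
    parts.append(f"INTO TABLE {table}")
    return " ".join(parts)


# One-time load from the local file system: no OVERWRITE needed.
print(load_data_statement("/path/to/movies.csv", "movies"))
# -> LOAD DATA LOCAL INPATH '/path/to/movies.csv' INTO TABLE movies

# Reload from a Hadoop path, replacing the existing rows.
print(load_data_statement("/data/movies.csv", "movies", local=False, overwrite=True))
# -> LOAD DATA INPATH '/data/movies.csv' OVERWRITE INTO TABLE movies
```

In the notebook, the resulting string would be passed to `spark.sql(...)`. Re-running a load without `OVERWRITE` adds a second copy of the file's rows, which is why the keyword only matters when loading more than once.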

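We create the movies table in text file format and the ratings table in ORC format:

```python
# movies: plain text, one comma-separated record per line
spark.sql("""
    create table movies (movieId int, title string, genres string)
    row format delimited fields terminated by ','
    stored as textfile
""")  # in textfile format

# ratings: ORC columnar format
spark.sql("""
    create table ratings (userId int, movieId int, rating float, timestamp string)
    stored as orc
""")  # in ORC format
```

Let's create another table in AVRO format. We will insert the count of movies by genre into it later.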
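The `create table` statements differ only in their column lists and storage clauses. As a minimal sketch, the hypothetical helper `create_table_statement` below builds them from a list of `(name, type)` pairs; the `genre_counts` table and its `movie_count` column are assumptions standing in for the AVRO table of movie counts by genre, whose definition is not shown here.

```python
def create_table_statement(name, columns, stored_as, delimiter=None):
    """Build a HiveQL CREATE TABLE statement (hypothetical helper).

    columns:   list of (column_name, hive_type) pairs
    stored_as: file format keyword, e.g. "textfile", "orc" or "avro"
    delimiter: field separator; only valid for delimited text files
    """
    fmt = stored_as.upper()
    if delimiter is not None and fmt != "TEXTFILE":
        raise ValueError("a field delimiter only applies to TEXTFILE tables")
    cols = ", ".join(f"{col} {typ}" for col, typ in columns)
    stmt = f"CREATE TABLE {name} ({cols})"
    if delimiter is not None:
        stmt += f" ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}'"
    return f"{stmt} STORED AS {fmt}"


movies = create_table_statement(
    "movies", [("movieId", "int"), ("title", "string"), ("genres", "string")],
    "textfile", delimiter=",")
ratings = create_table_statement(
    "ratings",
    [("userId", "int"), ("movieId", "int"), ("rating", "float"), ("timestamp", "string")],
    "orc")
# Hypothetical AVRO table for the per-genre movie counts.
genre_counts = create_table_statement(
    "genre_counts", [("genres", "string"), ("movie_count", "int")], "avro")

for stmt in (movies, ratings, genre_counts):
    print(stmt)
```

Each string would then be passed to `spark.sql(...)`. Rejecting a delimiter for ORC or AVRO reflects that `row format delimited` describes plain text layout, while those formats carry their own encoding.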

Setting up the metastore and the movies database

In this blog post, we will see how to use Spark with Hive; we will also see how to save data frames to any Hadoop-supported file system.

When it is not configured by `hive-site.xml`, the context automatically creates `metastore_db` in the current directory. As shown below, initially we do not have `metastore_db`, but after we instantiate SparkSession with Hive support, `metastore_db` is created. Further, when we execute the `create database` command, `spark-warehouse` is created.

First, let's see what we have in the current working directory:

```python
import os
os.listdir(os.getcwd())  # list the notebook's working directory
```

```
['Leveraging Hive with Spark using Python.ipynb',
```

Listing the directory again after instantiating the SparkSession shows that `metastore_db` has been created. Now we can use Hive commands to see databases and tables, although at this point we do not have any database or table.

We can list the functions available in Spark SQL with `show functions`; at the time of this writing, there are about 250 of them:

```python
fncs = spark.sql('show functions').collect()
for i in fncs:
    print(i)  # one Row per function
```

By the way, we can see what a function is used for and what its arguments are:

```python
spark.sql("describe function instr").show(truncate=False)
```

```
|Usage: instr(str, substr) - Returns the (1-based) index of the first occurrence of `substr` in `str`.|
```

The data we will use is the MovieLens 20M Dataset; we will use the movies, ratings and tags data sets. After creating the `movies` database, let's check that it exists. I am using Jupyter Notebook, so `!` enables me to use shell commands.

Now, let's create tables in text file, ORC and AVRO formats. But first, we have to make sure we are using the movies database by switching to it with `use movies`. The movies dataset has movieId, title and genres fields; the ratings dataset, on the other hand, has userId, movieId, rating and timestamp fields. Please refer to the Hive manual for details on how to create tables and load or insert data into them.
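Instead of a `!` shell command, the same check can be done in plain Python, which also works outside Jupyter. As a minimal sketch, assuming Spark's defaults (no `hive-site.xml`, and `spark.sql.warehouse.dir` left at `spark-warehouse` in the current directory), the hypothetical helper `hive_artifacts` reports whether `metastore_db`, `spark-warehouse` and a database directory such as `movies.db` exist.

```python
import os


def hive_artifacts(directory=".", database=None):
    """Report which Spark/Hive artifacts exist in `directory` (hypothetical helper).

    Assumes Spark defaults:
      metastore_db:     embedded metastore, created in the current directory
                        when no hive-site.xml configures the metastore
      spark-warehouse:  default warehouse directory (spark.sql.warehouse.dir)
      <database>.db:    one warehouse subdirectory per created database
    """
    report = {
        "metastore_db": os.path.isdir(os.path.join(directory, "metastore_db")),
        "spark-warehouse": os.path.isdir(os.path.join(directory, "spark-warehouse")),
    }
    if database is not None:
        db_dir = os.path.join(directory, "spark-warehouse", f"{database}.db")
        report[f"{database}.db"] = os.path.isdir(db_dir)
    return report


# Run before and after creating the SparkSession and the database to see what appeared.
print(hive_artifacts(".", database="movies"))
```

The `movies.db` naming matches the table location reported by `describe formatted`, where the ratings table lives under `spark-warehouse/movies.db/ratings`.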

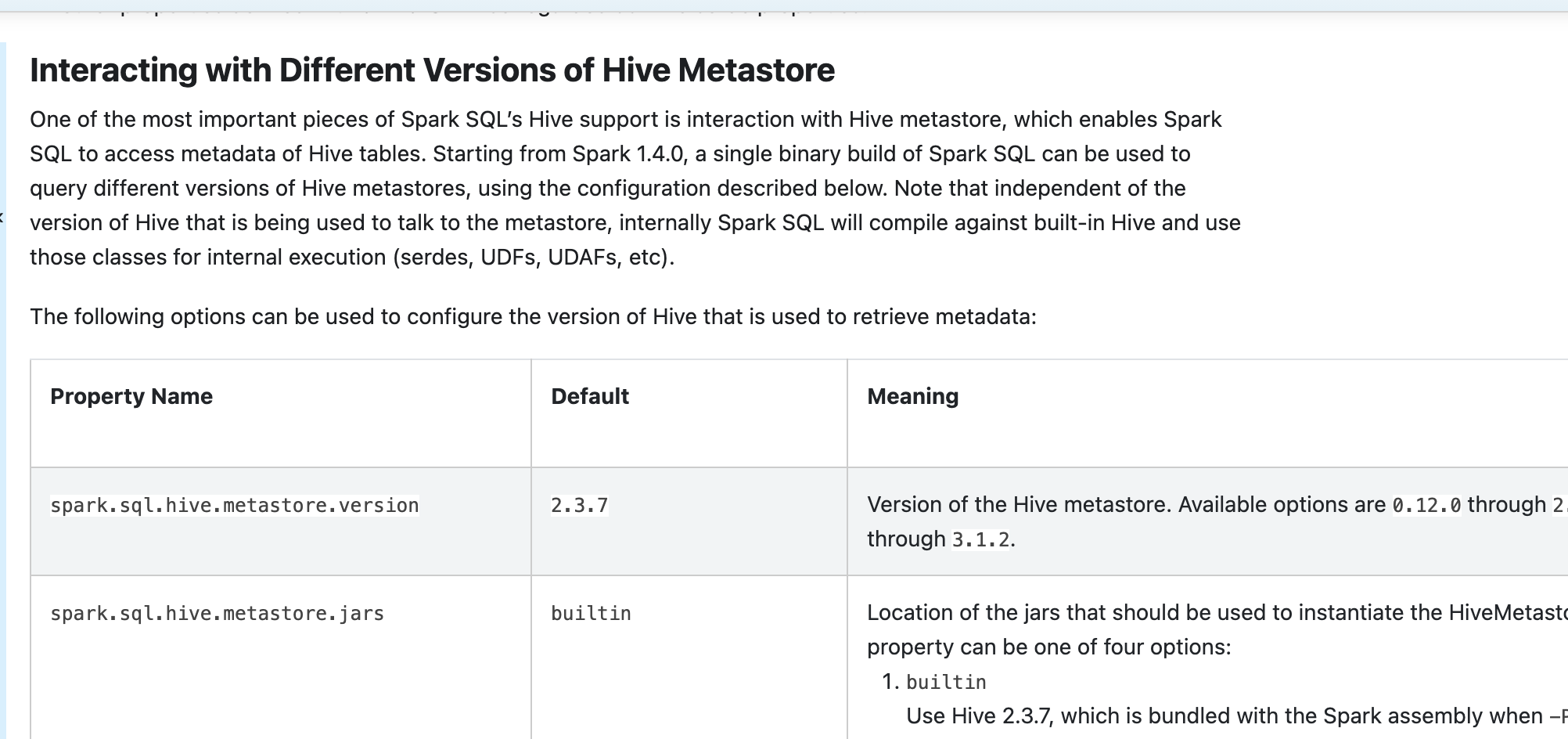

To work with Hive in Spark 2.0.0 and later, we have to instantiate SparkSession with Hive support, which includes connectivity to a persistent Hive metastore, support for Hive SerDes, and Hive user-defined functions. With earlier Spark versions, we have to use HiveContext, a variant of Spark SQL that integrates with data stored in Hive. We can enable Hive support even without an existing Hive deployment; in this tutorial, I am using standalone Spark.
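Spark reads Hive configuration from a `hive-site.xml` placed in its `conf/` directory; without one, the embedded Derby metastore (`metastore_db`) is created in whatever directory the session was started from. As a sketch, assuming that embedded Derby setup, the hypothetical helper `hive_site_xml` below generates a minimal `hive-site.xml` whose `javax.jdo.option.ConnectionURL` pins the metastore to a fixed path, so the same tables are visible regardless of the working directory. The path is an illustrative assumption; the tutorial itself relies on the defaults.

```python
import xml.etree.ElementTree as ET


def hive_site_xml(metastore_path):
    """Return the text of a minimal hive-site.xml (hypothetical helper).

    Points the embedded Derby metastore at `metastore_path` instead of
    ./metastore_db; create=true lets Derby create it on first use.
    """
    props = {
        "javax.jdo.option.ConnectionURL":
            f"jdbc:derby:;databaseName={metastore_path};create=true",
    }
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Write the result to $SPARK_HOME/conf/hive-site.xml before starting Spark.
print(hive_site_xml("/opt/spark/metastore_db"))
```

The session itself is still created with `SparkSession.builder.appName(...).enableHiveSupport().getOrCreate()`. Since Spark 2.0.0, `hive.metastore.warehouse.dir` in `hive-site.xml` is deprecated, so the warehouse location would instead be set with `.config("spark.sql.warehouse.dir", ...)` on the builder.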
